Conventional wisdom says, "If you don't want your data to be used, opt out of everything."

We say: "If your data is being collected anyway, it makes more sense to influence how it is used."

It's not, "Should companies have my data?" (They already do.)

The real question is: "Should my data help build better AI for everyone?"

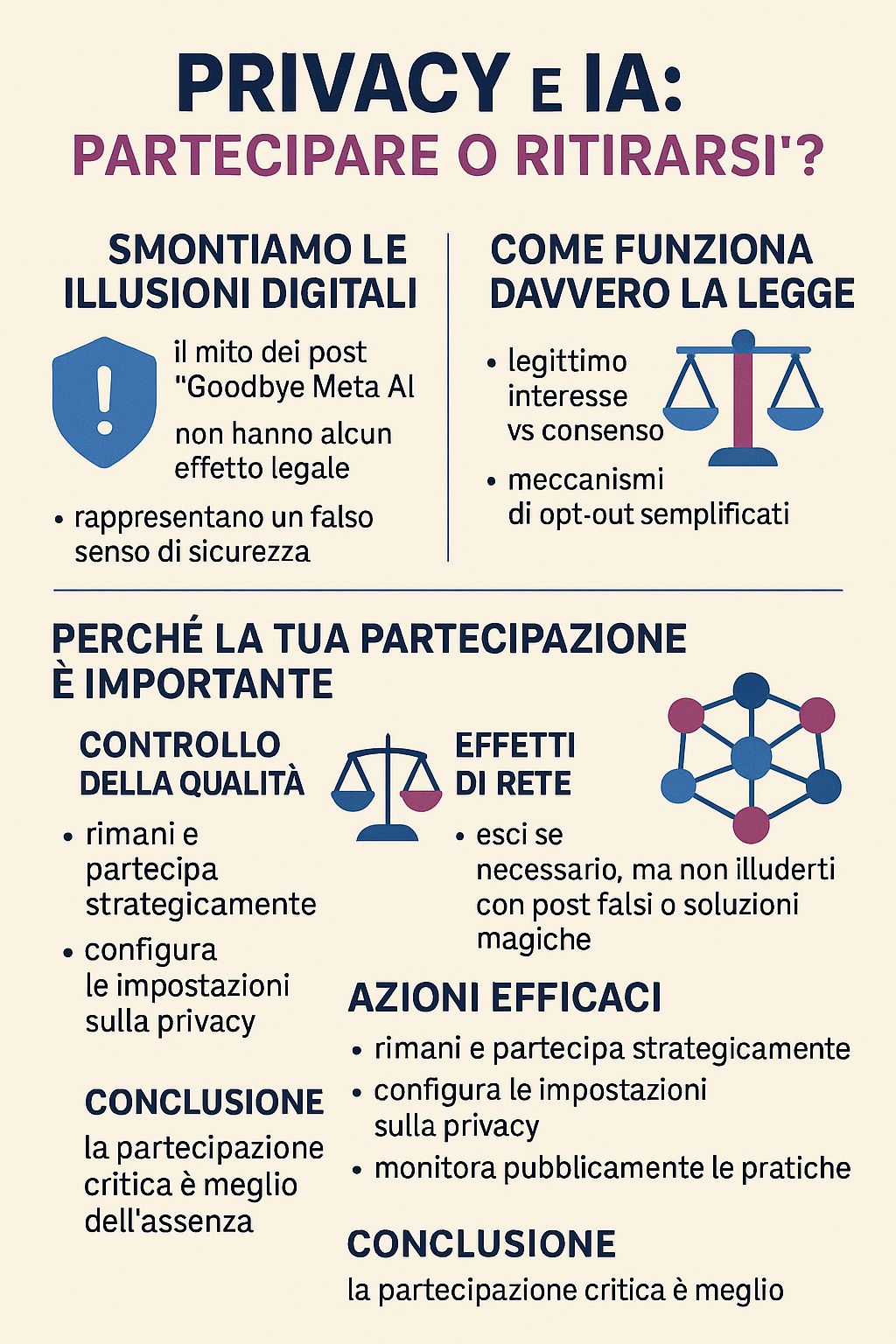

Before building a serious argument, it is essential to debunk a dangerous illusion circulating on social media: the viral "Goodbye Meta AI" posts that promise to protect your data simply by sharing a message.

The uncomfortable truth: these posts are completely fake and can make you more vulnerable.

As Meta itself explains, "sharing the message 'Goodbye Meta AI' does not constitute a valid form of opposition." These posts:

The viral success of these posts reveals a deeper problem: we prefer simple, illusory solutions to complex, informed decisions. Sharing a post makes us feel active without requiring the effort of truly understanding how our digital rights work.

But privacy cannot be defended with memes. It is defended with knowledge and conscious action.

As of May 31, 2025, Meta has implemented a new regime for AI training using "legitimate interest" as the legal basis instead of consent. This is not a loophole, but a legal tool provided for by the GDPR.

Legitimate interest (Article 6(1)(f) of the GDPR) allows companies to process data without explicit consent if they can demonstrate that their interest is not overridden by the user's interests or fundamental rights and freedoms. This creates a gray area where companies "tailor the law" through internal assessments.

The use of non-anonymized data entails "high risks of model inversion, memorization leaks, and extraction vulnerabilities." The computational power required means that only actors with very high capacity can effectively exploit this data, creating systemic asymmetries between citizens and large corporations.

Now that we have clarified the legal and technical realities, let's build the case for strategic participation.

When conscious people opt out, AI trains on those who remain. Do you want AI systems to be based primarily on data from people who:

Bias in AI occurs when training data is not representative. Your participation helps ensure:

AI systems improve with scale and diversity:

If you use AI-powered features (search, translation, recommendations, accessibility tools), your participation helps improve them for everyone, including future users who need them most.
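To make the representativeness argument concrete, here is a minimal sketch in Python. All group names, population sizes, and opt-out rates are hypothetical numbers chosen for illustration; they are not taken from Meta or from any study. The sketch only shows the arithmetic: when one group opts out far more often than another, its share of the remaining training data shrinks well below its share of the user base.

```python
from dataclasses import dataclass


@dataclass
class Group:
    name: str
    population: int      # number of users in the group (hypothetical)
    opt_out_rate: float  # fraction of the group that opts out (hypothetical)


def population_shares(groups):
    """Share of each group among all users."""
    total = sum(g.population for g in groups)
    return {g.name: g.population / total for g in groups}


def training_shares(groups):
    """Share of each group among the users left after opt-outs."""
    remaining = {g.name: g.population * (1 - g.opt_out_rate) for g in groups}
    total = sum(remaining.values())
    return {name: count / total for name, count in remaining.items()}


# Illustrative assumption: privacy-aware users opt out much more often.
groups = [
    Group("privacy-aware", population=300, opt_out_rate=0.8),
    Group("everyone else", population=700, opt_out_rate=0.1),
]

pop = population_shares(groups)
train = training_shares(groups)

for name in pop:
    print(f"{name}: {pop[name]:.0%} of users, {train[name]:.0%} of training data")
```

With these made-up numbers, the privacy-aware group is 30% of users but only about 9% of what remains for training. Real opt-out rates may look very different; the point is only that uneven opt-out changes who the data represents.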

Your privacy does not change significantly whether you opt in to or out of AI training. The same data already feeds:

The difference is whether this data also contributes to improving AI for everyone or only serves the immediate commercial interests of the platform.

This is exactly why responsible people like you should participate. Withdrawing does not stop the development of AI; it simply removes your voice from it.

AI systems will be developed regardless. The question is: with or without the contribution of people who think critically about these issues?

Understandable. But consider this: would you prefer AI systems to be built with or without the input of people who share your skepticism toward large corporations?

Your distrust is precisely why your critical participation is valuable.

Artificial intelligence is becoming a reality, whether you participate or not.

Your choice is not whether AI will be built, but whether the AI that will be built will reflect the values and perspectives of people who think carefully about these issues.

Opting out is like not voting. It doesn't stop the election; it just means that the result won't take your contribution into account.

In a world where only actors with extremely high computational capabilities can interpret and effectively exploit this data, your critical voice in training can have more impact than your absence.

Stay and participate strategically if:

And in the meantime:

But don't fool yourself with:

Your individual opt-out has minimal impact on your privacy, but staying in has a real impact on everyone.

In a world where AI systems will determine the flow of information, decisions, and interactions between people and technology, the question is not whether these systems should exist, but whether they should include the perspective of thoughtful and critical people like you.

Sometimes the most radical action is not to walk away, but to stay and make sure your voice is heard.

Anonymous

It's not about blindly trusting companies or ignoring privacy concerns. It's about recognizing that privacy isn't defended with memes, but with strategic and informed participation.

In an ecosystem where power asymmetries are enormous, your critical voice in AI training can have more impact than your protesting absence.

Whatever your choice, choose with awareness, not with digital illusions.

A word of sympathy also for the "privacy hermits": those pure souls who believe they can completely escape digital tracking by living offline like Tibetan monks in 2025.

Spoiler alert: even if you go and live in a remote cabin in the Dolomites, your data is already everywhere. Your primary care physician uses digital systems. The bank where you keep your savings to buy firewood tracks every transaction. The village supermarket has cameras and electronic payment systems. Even the postman who delivers your bills contributes to logistics datasets that feed optimization algorithms.

Total digital hermitage in 2025 essentially means excluding yourself from civil society. You can give up Instagram, but you cannot give up the healthcare, banking, education, or employment systems without dramatic consequences for your quality of life.

And while you build your anti-5G hut, your data continues to exist in the databases of hospitals, banks, insurance companies, municipalities, and tax agencies, and is still being used to train systems that will influence future generations.

The hermit paradox: your protest-driven isolation does not prevent AI systems from being trained on data from less aware individuals, but it excludes you from the possibility of influencing their development in more ethical directions.

Essentially, you have achieved the untainted moral purity of someone who observes history from the sidelines, while others—less enlightened but more present—write the rules of the game.



For concrete action: if you are in Europe, check the official opt-out procedures with your national data protection authority (in Italy, the Garante per la protezione dei dati personali). For general information, consult the privacy settings and terms of service of your platform. And remember: no social media post has legal value.