In recent months, the artificial intelligence community has been engaged in a heated debate sparked by two influential research papers published by Apple. The first, "GSM-Symbolic" (October 2024), and the second, "The Illusion of Thinking" (June 2025), questioned the alleged reasoning capabilities of Large Language Models, triggering mixed reactions across the industry.

As already analyzed in our previous in-depth study on "The Illusion of Progress: Simulating General Artificial Intelligence Without Achieving It", the question of artificial reasoning touches on the very heart of what we consider intelligence in machines.

Apple researchers conducted a systematic analysis of Large Reasoning Models (LRMs), the models that generate detailed reasoning traces before providing an answer. The results were surprising and, for many, alarming.

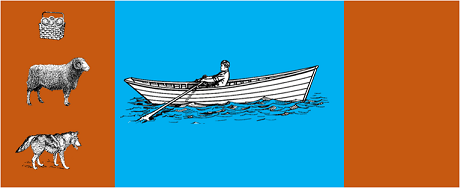

The study subjected the most advanced models to classical algorithmic puzzles such as the Tower of Hanoi.

The results showed that even small changes in problem formulation lead to significant changes in performance, suggesting a worrying fragility in reasoning. As reported in AppleInsider's coverage, "performance of all models decreases when only numerical values in the GSM-Symbolic benchmark questions are altered."
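To make the idea of altering only the numerical values concrete, here is a minimal sketch of a templated word problem whose numbers are resampled while the wording and the required reasoning stay the same. The template, the names, and the value ranges are hypothetical illustrations, not items from the GSM-Symbolic benchmark.

```python
import random

# Hypothetical template: only the placeholders change between variants.
TEMPLATE = (
    "{name} buys {boxes} boxes of pencils with {per_box} pencils in each box "
    "and gives away {given} pencils. How many pencils does {name} have left?"
)


def make_variant(rng):
    """Return one (question, answer) pair with freshly sampled values."""
    name = rng.choice(["Sofia", "Liam", "Mei"])
    boxes = rng.randint(2, 9)
    per_box = rng.randint(5, 20)
    # Never give away more pencils than were bought, so the answer stays >= 0.
    given = rng.randint(1, boxes * per_box)
    question = TEMPLATE.format(
        name=name, boxes=boxes, per_box=per_box, given=given
    )
    answer = boxes * per_box - given
    return question, answer


def make_variants(n, seed=0):
    """Generate n variants reproducibly from a fixed seed."""
    rng = random.Random(seed)
    return [make_variant(rng) for _ in range(n)]


if __name__ == "__main__":
    for question, answer in make_variants(3):
        print(question, "->", answer)
```

A model that has truly learned the procedure should score the same on every variant. A drop in accuracy across variants points to sensitivity to surface details.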

The response from the AI community was not long in coming. Alex Lawsen of Open Philanthropy, in collaboration with Anthropic's Claude Opus, published a detailed rebuttal entitled "The Illusion of the Illusion of Thinking", challenging the methodology and conclusions of the Apple study.

When Lawsen repeated the tests with alternative methodologies - asking models to generate recursive functions instead of listing all the moves - the results were dramatically different. Models such as Claude, Gemini and GPT correctly solved Tower of Hanoi problems with 15 disks, far beyond the complexity where Apple reported zero successes.
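For a sense of why the two task formats differ so much, the sketch below shows a recursive Tower of Hanoi solver of the kind the models were asked to produce, plus a small checker that replays the moves. It is the textbook recursion, not the prompt or the code from Lawsen's rebuttal. The peg labels and function names are illustrative.

```python
def hanoi(n, source="A", target="C", spare="B"):
    """Yield the (from_peg, to_peg) moves that transfer n disks to target."""
    if n == 0:
        return
    # Move the n-1 smaller disks out of the way, onto the spare peg.
    yield from hanoi(n - 1, source, spare, target)
    # Move the largest disk directly to the target peg.
    yield (source, target)
    # Move the n-1 smaller disks from the spare peg onto the largest disk.
    yield from hanoi(n - 1, spare, target, source)


def is_valid_solution(n, moves):
    """Replay the moves and check the rules and the final configuration."""
    pegs = {"A": list(range(n, 0, -1)), "B": [], "C": []}
    for src, dst in moves:
        if not pegs[src]:
            return False  # nothing to move from this peg
        disk = pegs[src].pop()
        if pegs[dst] and pegs[dst][-1] < disk:
            return False  # a larger disk cannot sit on a smaller one
        pegs[dst].append(disk)
    return pegs["C"] == list(range(n, 0, -1)) and not pegs["A"] and not pegs["B"]


if __name__ == "__main__":
    moves = list(hanoi(15))
    print(len(moves), is_valid_solution(15, moves))
```

The solver is a handful of lines, while the explicit move list for 15 disks has 2^15 - 1 = 32,767 entries. A model can therefore show that it knows the algorithm without writing out every move.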

Gary Marcus, a longtime critic of LLMs' reasoning skills, embraced the Apple results as confirmation of the thesis he has defended for 20 years. According to Marcus, LLMs continue to struggle with "distribution shift" (that is, with generalizing beyond their training data) while remaining "good solvers of problems already solved."

The discussion has also spread to specialized communities such as LocalLlama on Reddit, where developers and researchers debate the practical implications for open-source models and local implementation.

This debate is not purely academic: it has direct practical implications.

Several technical analyses highlight the need for hybrid approaches that combine neural language models with deterministic code.

A simple example: an AI assistant that helps with accounting. The language model understands the question "how much did I spend on travel this month?" and extracts the relevant parameters (category: travel, period: this month). But the SQL query that reads the database, computes the sum, and checks the fiscal constraints is run by deterministic code, not by the neural model.
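The division of labor in the accounting example might look like the following minimal sketch. Everything in it is an assumption made for illustration: `extract_parameters` is a keyword-matching stand-in for the language model call, the `expenses` table and its columns are invented, and the fiscal checks are left out.

```python
import sqlite3
from datetime import date


def extract_parameters(question, today):
    """Stand-in for the language model: question -> structured parameters.

    Hypothetical stub. A real system would call a model here and validate
    its output before using it.
    """
    text = question.lower()
    category = "travel" if "travel" in text else None
    period = today.strftime("%Y-%m") if "this month" in text else None
    return {"category": category, "period": period}


def total_spent(conn, category, period):
    """Deterministic part: a parameterized SQL query computes the sum."""
    row = conn.execute(
        "SELECT COALESCE(SUM(amount_cents), 0) FROM expenses "
        "WHERE category = ? AND strftime('%Y-%m', spent_on) = ?",
        (category, period),
    ).fetchone()
    return row[0]


def build_demo_db():
    """Create an in-memory database with a few made-up expense rows."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE expenses (category TEXT, amount_cents INTEGER, spent_on TEXT)"
    )
    conn.executemany(
        "INSERT INTO expenses VALUES (?, ?, ?)",
        [
            ("travel", 12050, "2025-06-03"),
            ("travel", 8000, "2025-06-17"),
            ("meals", 4500, "2025-06-10"),
            ("travel", 30000, "2025-05-28"),
        ],
    )
    return conn


if __name__ == "__main__":
    params = extract_parameters(
        "How much did I spend on travel this month?", date(2025, 6, 20)
    )
    print(params, total_spent(build_demo_db(), **params))
```

The model output never reaches the database as raw SQL. It only fills the placeholders of a fixed query, so the arithmetic stays exact and auditable whatever the model produces.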

It has not escaped observers' notice that the Apple paper was released just before WWDC, raising questions about strategic motivations. As noted in 9to5Mac's analysis, "the timing of the Apple paper - just before WWDC - raised a few eyebrows. Was this a research milestone, or a strategic move to reposition Apple in the broader AI landscape?"

The debate arising from the Apple papers reminds us that we are still in the early stages of understanding artificial intelligence. As pointed out in our previous article, the distinction between simulation and authentic reasoning remains one of the most complex challenges of our time.

The real lesson is not whether or not LLMs can "reason" in the human sense of the term, but rather how we can build systems that exploit their strengths while compensating for their limitations. In a world where AI is already transforming entire sectors, the question is no longer whether these tools are "smart," but how to use them effectively and responsibly.

The future of enterprise AI will probably lie not in a single revolutionary approach, but in the intelligent orchestration of several complementary technologies. And in this scenario, the ability to critically and honestly evaluate the capabilities of our tools becomes a competitive advantage itself.

Latest Developments (January 2026)

OpenAI releases o3 and o4-mini: On April 16, 2025, OpenAI publicly released o3 and o4-mini, the most advanced reasoning models in the o series. These models can now use tools agentically, combining web search, file analysis, visual reasoning, and image generation. o3 has set new records on benchmarks such as Codeforces, SWE-bench, and MMMU, while o4-mini balances performance and cost for high-volume reasoning tasks. The models demonstrate "thinking with images" capabilities, visually transforming content for deeper analysis.

DeepSeek-R1 shakes up the AI industry: In January 2025, DeepSeek released R1, an open-source reasoning model that achieved performance comparable to OpenAI o1 with a training cost of only $6 million (compared to hundreds of millions for Western models). DeepSeek-R1 demonstrates that reasoning capabilities can be incentivized through pure reinforcement learning, without the need for annotated human demonstrations. The model became the #1 free app on the App Store and Google Play in dozens of countries. In January 2026, DeepSeek published an expanded 60-page paper revealing the secrets of training and candidly admitting that techniques such as Monte Carlo Tree Search (MCTS) did not work for general reasoning.

Anthropic updates Claude's "Constitution": On January 22, 2026, Anthropic published a new 23,000-word constitution for Claude, shifting from a rules-based approach to one based on understanding ethical principles. The document becomes the first framework from a major AI company to formally recognize the possibility of AI consciousness or moral status, stating that Anthropic cares about Claude's "psychological well-being, sense of self, and welfare."

The debate intensifies: A July 2025 study replicated and refined Apple's benchmarks, confirming that LRMs still show cognitive limitations when complexity increases moderately (about 8 disks in the Tower of Hanoi). The researchers demonstrated that this is due not only to output constraints but also to real cognitive limitations, highlighting that the debate is far from over.

For insights into your organization's AI strategy and implementation of robust solutions, our team of experts is available for customized consultations.